Most of us in the geospatial domain have heard of data fusion (or image fusion): a technique for combining the spectral information of a coarse-resolution image with the spatial detail of a finer-resolution image. The result is a product that synergistically combines the best characteristics of each of its components and contains more detailed information than any of the individual sources.
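To make the idea concrete, here is a minimal sketch of one classic pansharpening technique, the Brovey transform. It is not necessarily the method used by any tool mentioned in this post. It assumes the coarse bands and the fine panchromatic band are already co-registered and that the resolution ratio is an integer `factor`. The function names are hypothetical, and plain Python lists stand in for raster arrays.

```python
def upsample_nearest(band, factor):
    """Nearest-neighbour upsampling: repeat each coarse pixel factor x factor times."""
    out = []
    for row in band:
        wide = [v for v in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def brovey_fuse(ms_bands, pan, factor):
    """Brovey transform: scale each upsampled band by pan / (sum of all bands).

    ms_bands: list of coarse bands, each band[row][col]
    pan:      fine-resolution panchromatic band[row][col]
    """
    up = [upsample_nearest(b, factor) for b in ms_bands]
    rows, cols = len(pan), len(pan[0])
    fused = [[[0.0] * cols for _ in range(rows)] for _ in ms_bands]
    for r in range(rows):
        for c in range(cols):
            total = sum(b[r][c] for b in up)
            # Ratio injects the fine spatial detail while preserving band ratios.
            ratio = pan[r][c] / total if total else 0.0
            for k, b in enumerate(up):
                fused[k][r][c] = b[r][c] * ratio
    return fused
```

For example, `brovey_fuse([[[0.2]], [[0.6]]], [[0.4, 0.8], [0.0, 1.6]], 2)` spreads one coarse two-band pixel over a 2 x 2 fine grid, keeping the 1:3 ratio between the bands while taking its brightness pattern from the fine image.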

Hyperspectral imaging, or imaging spectroscopy, combines the power of digital imaging and spectroscopy. A hyperspectral camera acquires the light intensity (radiance) for a large number (typically a few tens to several hundred) of contiguous spectral bands. Every pixel in the image thus contains a continuous spectrum (in radiance or reflectance) and can be used to characterize the objects in the scene with great precision and detail.
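The phrase "every pixel contains a spectrum" can be shown directly. The sketch below assumes a band-sequential cube stored as `cube[band][row][col]` (a hypothetical in-memory layout) and compares a pixel's spectrum to a reference using the spectral angle, a common way of matching spectral shapes. The function names are illustrative only.

```python
import math


def pixel_spectrum(cube, row, col):
    """Return the spectrum of one pixel from a band-sequential cube[band][row][col]."""
    return [band[row][col] for band in cube]


def spectral_angle(a, b):
    """Angle in radians between two spectra; 0 means identical spectral shape."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        raise ValueError("spectral angle is undefined for an all-zero spectrum")
    # Clamp to guard against tiny floating-point overshoot outside [-1, 1].
    cos = max(-1.0, min(1.0, dot / norm))
    return math.acos(cos)
```

Because the angle ignores overall brightness, a shaded and a sunlit pixel of the same material give nearly the same result, which is one reason spectral shape is useful for characterizing materials.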

Hyperspectral images provide much more detailed information about the scene than a normal color camera or a multispectral camera. They can help to distinguish urban features from vegetation and even to discriminate between different tree species. Urban planners can use imaging spectroscopy to detect objects covered with different roofing materials, as well as streets and open spaces.

LiDAR, which stands for Light Detection and Ranging, is a remote sensing method that uses light in the form of a pulsed laser to measure ranges (variable distances) to the Earth. These light pulses, combined with other data recorded by the airborne system, generate precise three-dimensional information about the shape of the Earth and its surface characteristics. For vegetation, LiDAR data provide information on the 3D structure of trees, such as canopy height and volume. Because of its high sampling rate, LiDAR data can be used to estimate these metrics at the level of individual trees.
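The ranging principle reduces to simple arithmetic: a pulse travels to the target and back, so the range is the speed of light times the round-trip time, divided by two. The sketch below uses the vacuum speed of light (the speed in air is slightly lower) and a deliberately simplified flat-Earth geometry for turning a range into a ground elevation. The helper names and the `off_nadir_deg` parameter are hypothetical; a real system derives position and pointing from its GPS and inertial measurements.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second, in vacuum


def pulse_range(round_trip_seconds):
    """Sensor-to-target distance: the pulse covers the range twice."""
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0


def target_elevation(sensor_altitude_m, round_trip_seconds, off_nadir_deg=0.0):
    """Simplified geometry: flat Earth, known scan angle from vertical."""
    r = pulse_range(round_trip_seconds)
    return sensor_altitude_m - r * math.cos(math.radians(off_nadir_deg))
```

For example, a round trip of 1 microsecond corresponds to a range of about 149.9 m, so a return seen straight down from 1000 m lies at roughly 850.1 m.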

By combining LiDAR and hyperspectral data, you can:

- Create more accurate classification images of urban features

- Identify individual tree species

- Estimate forest biomass

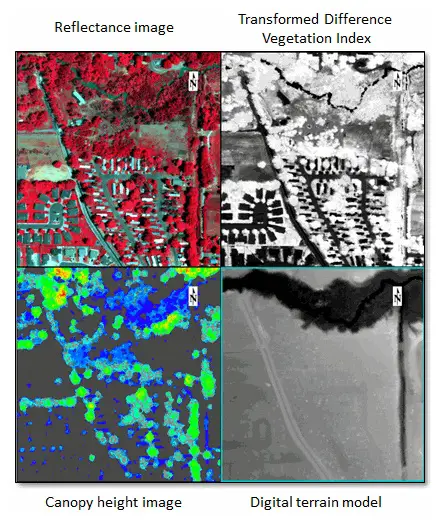

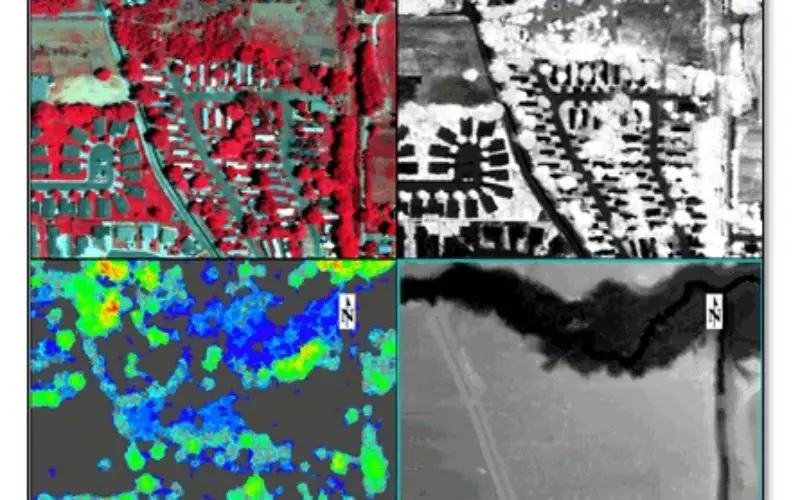

Here are the results of a study by Jason Wolfe, published as a blog post at Exelis. He acquired sample LiDAR and hyperspectral imagery of the city of Fruita, Colorado, from the National Ecological Observatory Network (NEON). These datasets are well suited to data fusion techniques:

- Airborne imaging spectrometer reflectance data in 428 bands extending from 380 to 2510 nanometers, with a spectral sampling of 5 nanometers. Spatial resolution is approximately 1 meter.

- Airborne LiDAR point cloud data, along with derived gridded products such as digital surface models, canopy height models, slope, aspect, etc.
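A canopy height model is commonly computed as the digital surface model (top of canopy and buildings) minus a bare-earth digital terrain model. The terrain model is not listed above, so treat it as an assumed extra input. The sketch below assumes both grids are already aligned cell for cell and clamps small negative differences (noise) to zero. The function name is hypothetical.

```python
def canopy_height_model(dsm, dtm, min_height=0.0):
    """CHM = DSM - DTM per cell, with negative differences clamped to min_height."""
    if len(dsm) != len(dtm) or any(len(a) != len(b) for a, b in zip(dsm, dtm)):
        raise ValueError("DSM and DTM must share the same grid")
    return [
        [max(s - t, min_height) for s, t in zip(srow, trow)]
        for srow, trow in zip(dsm, dtm)
    ]
```

For example, a cell with a surface elevation of 112 m over ground at 100 m gets a canopy height of 12 m, while a cell where the surface falls 1 m below the terrain model is set to 0.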

Multi-view display in ENVI showing hyperspectral- and LiDAR-derived products

From the reflectance image, one can derive raster products such as:

- Principal components

- Classification image created with the Support Vector Machine (SVM) algorithm and in-scene spectra (since ground-truth data are not yet available for this area)

- Spectral indices to indicate vegetation health or to separate man-made features from the rest of the scene
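As an example of a spectral index, the Normalized Difference Vegetation Index is NDVI = (NIR - Red) / (NIR + Red). With hundreds of narrow bands, you first pick the bands closest to the red and near-infrared wavelengths. The sketch below assumes a uniform 5 nm band grid starting at 380 nm (an approximation; in practice read the band-center wavelengths from the file's metadata) and uses 660 nm and 860 nm as assumed red and NIR targets. All names are hypothetical.

```python
def band_centers(first_nm=380.0, step_nm=5.0, count=428):
    """Assumed band-center wavelengths on a uniform grid."""
    return [first_nm + i * step_nm for i in range(count)]


def nearest_band(centers, target_nm):
    """Index of the band whose center is closest to target_nm."""
    return min(range(len(centers)), key=lambda i: abs(centers[i] - target_nm))


def ndvi(cube, centers, red_nm=660.0, nir_nm=860.0):
    """NDVI per pixel from a band-sequential cube[band][row][col]."""
    red = cube[nearest_band(centers, red_nm)]
    nir = cube[nearest_band(centers, nir_nm)]
    return [
        [(n - r) / (n + r) if (n + r) else 0.0 for r, n in zip(rrow, nrow)]
        for rrow, nrow in zip(red, nir)
    ]
```

Healthy vegetation reflects strongly in the near infrared and absorbs red light, so it scores high, while roofs and pavement score near zero. That contrast is what lets the index separate man-made features from vegetation.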

The next step would be to create a raster layer stack in ENVI that consists of various images derived from the LiDAR and hyperspectral data. The images must be co-registered before the layer stack is created. The layer-stacked data can then be opened in ENVI Feature Extraction to extract objects that meet certain criteria. For example, to extract buildings above a certain height, you could create a rule that selects a specific range of height values from the digital surface model together with low NDVI values to exclude vegetation. Alternatively, you could use the hyperspectral reflectance image together with the canopy height raster to identify different tree species.
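Outside ENVI, the same rule-based idea can be sketched in a few lines. This is not ENVI Feature Extraction's API. It assumes the layers are already co-registered onto one grid, uses a hypothetical `"height"` layer (for example, surface height above ground) and an `"ndvi"` layer, and uses threshold values that are purely illustrative.

```python
def layer_stack(layers):
    """Check that named rasters share one grid; return them as a stack (dict)."""
    shapes = {name: (len(r), len(r[0])) for name, r in layers.items()}
    if len(set(shapes.values())) != 1:
        raise ValueError(f"layers are not on a common grid: {shapes}")
    return layers


def building_mask(stack, min_height, max_height, max_ndvi):
    """True where height is in range AND NDVI is low (i.e. not vegetation)."""
    h, v = stack["height"], stack["ndvi"]
    return [
        [min_height <= hh <= max_height and nv < max_ndvi for hh, nv in zip(hrow, vrow)]
        for hrow, vrow in zip(h, v)
    ]
```

With heights `[[8, 12], [1, 9]]` m and NDVI `[[0.1, 0.7], [0.05, 0.2]]`, a rule of 3 to 50 m with NDVI below 0.3 flags the top-left and bottom-right cells: the 12 m cell is excluded as vegetation and the 1 m cell as too low. Real object-based tools apply such rules to segmented objects rather than single pixels.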

Hi!

Thank you for your website. I would like to know how to fuse LiDAR data with a hyperspectral image. How can I combine the two?

Thanks in advance for your help