HoloToolkit Expands the Spatial Mapping Capabilities of Microsoft HoloLens

According to a recent case study published by Microsoft, Microsoft and Asobo (which wrote the original code and created a library that encapsulates the spatial mapping capability of HoloLens) have now open-sourced this code and made it available in HoloToolkit. All the source code is included, so you can customize it to your needs. The code for the C++ solver has been wrapped into a UWP DLL and exposed to Unity with a drop-in prefab contained within HoloToolkit.

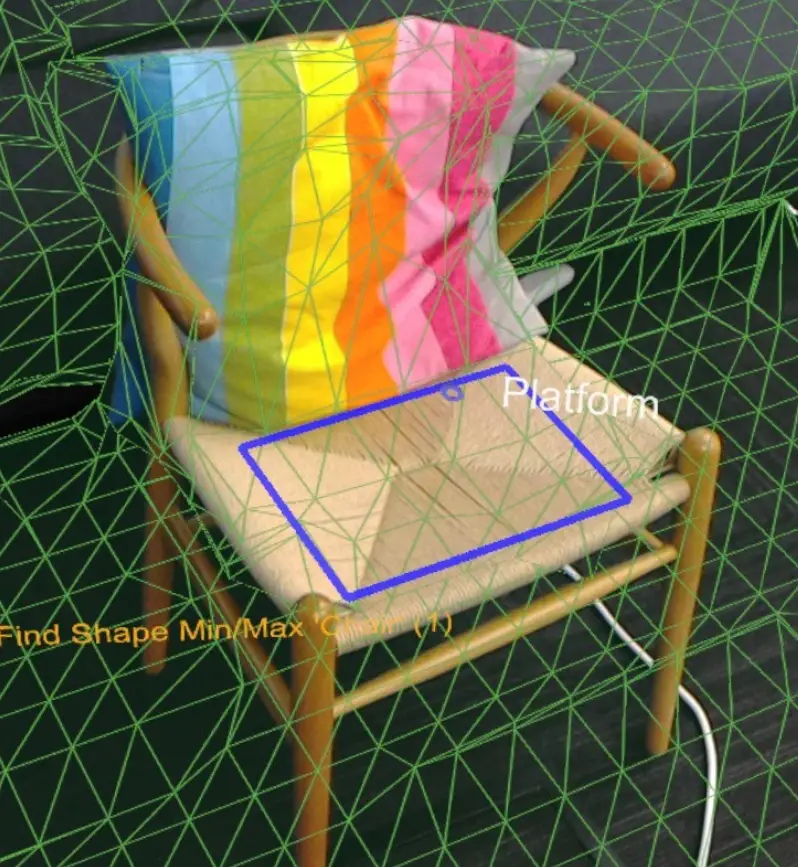

The Unity sample includes many useful queries that let you find empty spaces on walls, place objects on the ceiling or on large open areas of the floor, identify places for characters to sit, and run a myriad of other spatial understanding queries.

While the spatial mapping solution provided by the HoloLens is designed to be generic enough to meet the needs of the entire gamut of problem spaces, the spatial understanding module was built to support the needs of two specific games. As such, its solution is structured around a specific process and set of assumptions:

- Fixed size playspace: The user specifies the maximum playspace size in the init call.

- One-time scan process: The process requires a discrete scanning phase where the user walks around, defining the playspace. Query functions will not function until after the scan has been finalized.

- User-driven playspace “painting”: During the scanning phase, the user moves and looks around the playspace, effectively painting the areas that should be included. The generated mesh is important for providing user feedback during this phase.

- Indoor home or office setup: The query functions are designed around flat surfaces and walls at right angles. This is a soft limitation. However, during the scanning phase, a primary axis analysis is completed to optimize the mesh tessellation along the major and minor axes.
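
To make the idea of a primary axis analysis more concrete, here is a minimal sketch in Python. The article does not describe how the module actually performs this analysis, so the approach below (a plain 2D principal component analysis over floor-plane sample points) and the function name `primary_axis_angle` are assumptions for illustration only.

```python
import math


def primary_axis_angle(points):
    """Estimate the dominant horizontal axis of a set of floor-plane points.

    points: iterable of (x, z) coordinates in meters (hypothetical input).
    Returns the axis angle in radians, normalized to [0, pi).
    """
    pts = list(points)
    n = len(pts)
    if n < 2:
        raise ValueError("need at least two points")

    mean_x = sum(p[0] for p in pts) / n
    mean_z = sum(p[1] for p in pts) / n

    # Entries of the 2x2 covariance matrix of the samples.
    sxx = sum((p[0] - mean_x) ** 2 for p in pts) / n
    szz = sum((p[1] - mean_z) ** 2 for p in pts) / n
    sxz = sum((p[0] - mean_x) * (p[1] - mean_z) for p in pts) / n

    # Closed-form orientation of the eigenvector with the largest eigenvalue.
    angle = 0.5 * math.atan2(2.0 * sxz, sxx - szz)
    return angle % math.pi
```

Given points spread along a wall that runs at 30 degrees to the x axis, this returns roughly `math.pi / 6`. A room with a square footprint has no clear dominant axis under this approach, which is one reason a real solver might rely on other cues such as surface normals.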

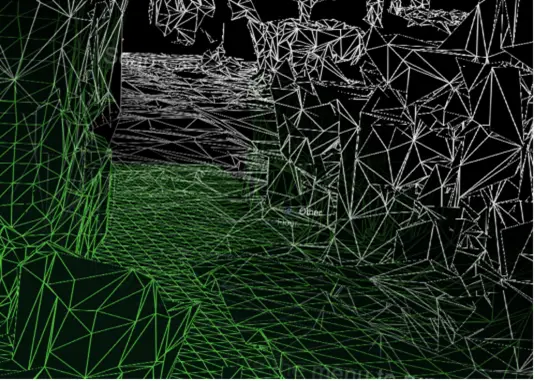

Spatial mapping mesh in white and understanding playspace mesh in green

Once the spatial understanding module is loaded, the first thing it does is scan the space so that all the usable surfaces, such as the floor, ceiling, and walls, are identified and labeled. During the scanning process, we look around the room and “paint” the areas that should be included in the scan.

The mesh seen during this phase is an important piece of visual feedback that lets users know what parts of the room are being scanned. The DLL for the spatial understanding module internally stores the playspace as a grid of 8 cm voxel cubes. During the initial part of scanning, a primary component analysis is completed to determine the axes of the room. Internally, the module stores its voxel space aligned to these axes. A mesh is generated approximately every second by extracting the isosurface from the voxel volume.
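
As a rough illustration of what an axis-aligned grid of 8 cm voxels means in practice, the following Python sketch maps a world-space point to integer voxel indices. Only the 8 cm voxel size comes from the text above. The function name `world_to_voxel`, the `origin` and `yaw` parameters, and the choice to treat the room orientation as a single rotation about the vertical axis are assumptions, not the module's actual implementation.

```python
import math

VOXEL_SIZE_M = 0.08  # 8 cm voxels, as stated in the article


def world_to_voxel(point, origin, yaw):
    """Map a world-space point (x, y, z) to integer voxel indices.

    origin: world position of the grid's reference corner (hypothetical).
    yaw:    rotation of the room axes about the vertical y axis, in radians
            (hypothetical; stands in for the result of the axis analysis).
    """
    dx = point[0] - origin[0]
    dy = point[1] - origin[1]
    dz = point[2] - origin[2]

    # Project the horizontal offset onto the room-aligned axes.
    c, s = math.cos(yaw), math.sin(yaw)
    local_x = c * dx + s * dz
    local_z = -s * dx + c * dz

    # Quantize each coordinate to a voxel index.
    return (
        math.floor(local_x / VOXEL_SIZE_M),
        math.floor(dy / VOXEL_SIZE_M),
        math.floor(local_z / VOXEL_SIZE_M),
    )
```

With `yaw = 0.0` and the origin at zero, the point `(0.20, 0.0, 0.09)` falls into voxel `(2, 0, 1)`. Because the playspace size is fixed at init time, a grid like this has a known, bounded number of cells.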

The blue rectangle highlights the results of the chair shape query.

The included SpatialUnderstanding.cs file manages the scanning phase process. It calls the following functions:

- SpatialUnderstanding_Init: Called once at the start.

- GeneratePlayspace_InitScan: Indicates that the scan phase should begin.

- GeneratePlayspace_UpdateScan_DynamicScan: Called each frame to update the scanning process. The camera position and orientation are passed in and used for the playspace painting process described above.

- GeneratePlayspace_RequestFinish: Called to finalize the playspace. This will use the areas “painted” during the scan phase to define and lock the playspace. The application can query statistics during the scanning phase as well as query the custom mesh for providing user feedback.

- Import_UnderstandingMesh: During scanning, the SpatialUnderstandingCustomMesh behavior provided by the module and placed on the understanding prefab will periodically query the custom mesh generated by the process. In addition, this is done once more after scanning has been finalized.
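
The order of these calls can be modeled as a small state machine. The Python sketch below is not the HoloToolkit API: the class `ScanSession`, its state names, and its methods are hypothetical stand-ins that only mirror the call order listed above and the rule that queries are unavailable until the scan has been finalized. It also assumes that finalization completes immediately, which may not hold for the real module.

```python
from enum import Enum, auto


class ScanState(Enum):
    NOT_INITIALIZED = auto()
    READY = auto()
    SCANNING = auto()
    DONE = auto()


class ScanSession:
    """Toy model of the scan lifecycle (hypothetical, for illustration)."""

    def __init__(self):
        self.state = ScanState.NOT_INITIALIZED
        self.painted = []  # camera poses recorded while scanning

    def init(self):
        # Stands in for SpatialUnderstanding_Init: called once at the start.
        self.state = ScanState.READY

    def init_scan(self):
        # Stands in for GeneratePlayspace_InitScan.
        if self.state is not ScanState.READY:
            raise RuntimeError("call init() before init_scan()")
        self.state = ScanState.SCANNING

    def update_scan(self, cam_position, cam_forward):
        # Stands in for GeneratePlayspace_UpdateScan_DynamicScan (per frame).
        if self.state is not ScanState.SCANNING:
            raise RuntimeError("not scanning")
        self.painted.append((cam_position, cam_forward))

    def request_finish(self):
        # Stands in for GeneratePlayspace_RequestFinish: lock the playspace.
        if self.state is not ScanState.SCANNING:
            raise RuntimeError("not scanning")
        self.painted = tuple(self.painted)
        self.state = ScanState.DONE

    def query_sample_count(self):
        # Placeholder for any query; only valid once the scan is finalized.
        if self.state is not ScanState.DONE:
            raise RuntimeError("queries are unavailable until the scan is finalized")
        return len(self.painted)
```

A typical sequence would be `init()`, `init_scan()`, repeated `update_scan(...)` calls while the user looks around, then `request_finish()`, after which queries become available.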
