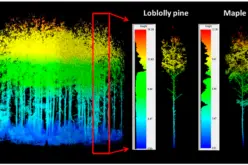

A LiDAR (Light Detection and Ranging) system emits laser light and precisely times how long its reflections take to return, which lets it map and track objects within its detection range. A LiDAR instrument principally consists of a laser, a scanner, and a specialized GPS receiver.
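
The ranging principle can be sketched in a few lines of Python. This is a simplified illustration, not any particular sensor's firmware. It assumes one time-of-flight measurement per pulse and ignores the refractive index of air. The helper names (`tof_to_range_m`, `to_cartesian`) and the x-forward, z-up axis convention are hypothetical. The scanner's beam angles turn each measured range into a 3D point, and many such points make up a point cloud.

```python
import math

# Speed of light in vacuum (m/s). Assumption: the slight slowdown of light in
# air is ignored, which shifts real ranges by well under 0.1%.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_to_range_m(round_trip_time_s: float) -> float:
    """Convert a laser pulse's round-trip time into a one-way range in metres.

    The light travels to the target and back, so the range is half of
    (speed of light x round-trip time).
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def to_cartesian(range_m: float, azimuth_rad: float, elevation_rad: float):
    """Turn a range plus the scanner's beam angles into an (x, y, z) point.

    Hypothetical convention: x points forward, y to the left, z up; azimuth is
    measured in the x-y plane and elevation up from that plane.
    """
    horizontal = range_m * math.cos(elevation_rad)
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        range_m * math.sin(elevation_rad),
    )


if __name__ == "__main__":
    # A reflection that returns 200 nanoseconds after the pulse was emitted.
    r = tof_to_range_m(200e-9)
    print(f"range: {r:.2f} m")  # range: 29.98 m
    # The same return, seen 30 degrees to the left and level with the sensor.
    print(to_cartesian(r, math.radians(30), 0.0))
```

The factor of two is the key step: the measured time covers the trip out and back, so light's roughly 30 cm per nanosecond works out to about 15 cm of range per nanosecond of delay.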

Unlike a camera, which captures a two-dimensional (2D) rendition of a three-dimensional (3D) scene, a LiDAR system captures its surroundings in full 3D. LiDAR systems can provide real-time data on even subtle changes in the positions and velocities of nearby objects. The 3D data collected by LiDAR is enormous.

Processing such large volumes of data requires a lot of memory and is time-intensive. A self-driving car also collects huge amounts of data, which must be processed very quickly, in real time.

A typical 64-channel LiDAR sensor, for example, generates about 2 million points per second. Because of the added spatial dimension, state-of-the-art 3D models require 14 times more computation at inference time than their 2D image counterparts. As a result, to navigate effectively, engineers typically first have to collapse the data into 2D, which introduces significant information loss.
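
One common way to collapse a point cloud into 2D is a bird's-eye-view (BEV) projection. The article does not say which projection engineers use, so the following is only an illustrative sketch under that assumption: the function name `to_bev_height_map`, the 0.5 m cell size, and the keep-the-highest-point rule are hypothetical choices. It shows concretely where the information loss comes from, because several points stacked in the same cell are reduced to a single number.

```python
import math
from typing import Dict, Iterable, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres
Cell = Tuple[int, int]  # (x index, y index) in the 2D grid


def to_bev_height_map(points: Iterable[Point], cell_size_m: float = 0.5) -> Dict[Cell, float]:
    """Collapse a 3D point cloud into a sparse 2D bird's-eye-view height map.

    Illustrative sketch: the cell size and the max-height rule are assumptions.
    Every point is dropped into an (x, y) grid cell and only the highest z
    value per cell survives; all other vertical detail in the cell is lost.
    """
    if cell_size_m <= 0:
        raise ValueError("cell_size_m must be positive")
    grid: Dict[Cell, float] = {}
    for x, y, z in points:
        cell = (math.floor(x / cell_size_m), math.floor(y / cell_size_m))
        if cell not in grid or z > grid[cell]:
            grid[cell] = z
    return grid


if __name__ == "__main__":
    # Three returns from a single 0.5 m x 0.5 m patch: the road surface,
    # a pedestrian's knee and the top of their head (illustrative values).
    cloud = [(2.3, 0.1, 0.05), (2.1, 0.2, 0.5), (2.2, 0.3, 1.8)]
    print(to_bev_height_map(cloud))  # {(4, 0): 1.8}
```

With roughly 2 million points arriving every second, this kind of reduction makes the data far cheaper to process, but in the example the road surface, a pedestrian's knee, and the top of their head all become one height value.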

At the same time, increasing the robustness of these systems is critical for self-driving cars; however, even estimating a model’s uncertainty is very challenging because sampling-based methods are costly. A team of researchers at MIT has been working on a system for self-driving cars that uses machine learning to eliminate the need for custom hand-tuning. Their new end-to-end framework can navigate autonomously using only raw 3D point cloud data and low-resolution GPS maps like those found on today’s smartphones.

End-to-end learning from raw LiDAR data is computationally intensive, since it involves feeding the computer huge amounts of rich sensory information from which it learns how to steer. Because of this, the team had to design new deep learning components that use modern GPU hardware more efficiently, so that the vehicle can be controlled in real time.

In real-world scenarios, both the cameras and the LiDAR sensors in self-driving cars can capture erroneous data, for example in poor weather conditions.

For example, picture yourself driving through a tunnel and then emerging into the sunlight: for a split second, your eyes will likely have trouble seeing because of the glare.

To handle this, the MIT team’s system can estimate how certain it is about any given prediction, and can therefore give more or less weight to that prediction in making its decisions. (In the case of emerging from a tunnel, it would essentially disregard any prediction that should not be trusted due to inaccurate sensor data.)
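
The idea of weighting predictions by their certainty can be illustrated with inverse-variance weighting, a standard, generic fusion rule. This is a swapped-in technique, not the MIT team's hybrid evidential fusion, whose internals the article does not describe; the `Prediction` class, the `fuse_predictions` function, and the `max_variance` cut-off are all hypothetical. Each prediction carries an estimated variance, confident predictions receive large weights, and predictions that are too uncertain (like those from a sensor blinded by glare) are discarded.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Prediction:
    """A hypothetical control prediction paired with its own uncertainty."""

    steering: float  # e.g. a steering command; units are illustrative
    variance: float  # estimated variance; larger means less certain


def fuse_predictions(predictions: List[Prediction], max_variance: float = 1.0) -> Optional[float]:
    """Fuse predictions by inverse-variance weighting (a generic rule, not MIT's method).

    Predictions with a variance above max_variance (or a non-positive one)
    are ignored entirely. Returns None when no prediction is trusted, leaving
    the fallback behaviour to the caller.
    """
    trusted = [p for p in predictions if 0.0 < p.variance <= max_variance]
    if not trusted:
        return None
    # Each trusted prediction gets a weight of 1 / variance, so a confident
    # prediction pulls the result much harder than an uncertain one.
    weights = [1.0 / p.variance for p in trusted]
    return sum(w * p.steering for w, p in zip(weights, trusted)) / sum(weights)


if __name__ == "__main__":
    preds = [
        Prediction(steering=0.40, variance=5.0),  # glare-blinded input: dropped
        Prediction(steering=0.10, variance=0.1),  # confident: weight 10
        Prediction(steering=0.20, variance=0.4),  # less confident: weight 2.5
    ]
    print(f"{fuse_predictions(preds):.3f}")  # 0.120
```

Returning `None` instead of a default value is a deliberate choice in this sketch: if every input is untrustworthy, the safe fallback (for example, slowing down) is a planning decision that arguably belongs outside the fusion step.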

The team calls their approach “hybrid evidential fusion,” because it fuses the different control predictions together to arrive at its motion-planning choices.

In many respects, the system itself is a fusion of three previous MIT projects:

- MapLite, a hand-tuned framework for driving without high-definition 3D maps

- “variational end-to-end navigation,” a machine learning system that is trained using human driving data to learn how to navigate from scratch

- SPVNAS, an efficient 3D deep learning solution that optimizes both the neural architecture and the inference library

As a next step, the team plans to continue scaling their system to increasingly complex real-world conditions, including adverse weather and dynamic interactions with other vehicles.

Note – Liu and Amini co-wrote the new paper with MIT professors Song Han and Daniela Rus. Their other co-authors include research assistant Sibo Zhu and associate professor Sertac Karaman. The paper will be presented later this month at the International Conference on Robotics and Automation (ICRA).

Source – MIT Computer Science & Artificial Intelligence Lab