SLAM (Simultaneous Localization and Mapping) is a technique that allows a device or robot to build a map of its surroundings and localize itself on that map at the same time.

SLAM algorithms are used in autonomous vehicles and robots to map unknown surroundings. The resulting maps can then be used for tasks such as path planning and obstacle avoidance.

In short:

S+L+A+M = Simultaneous + Localization + and + Mapping

The origins of SLAM can be traced back to the 1980s and 1990s, when the robotics industry was building robots for industrial use. Engineers wanted robots that could navigate a workshop floor without colliding with obstacles or bumping into walls.

Nowadays, SLAM is central to a range of indoor, outdoor, aerial, and underwater applications for both manned and autonomous vehicles.

Let’s look at SLAM in more detail. One disclaimer first: we are not going to deal with the full mathematical treatment of the SLAM algorithm. For those interested in the mathematics, a link is included in the sources at the end of the article.

What is SLAM?

SLAM uses sensors to collect visible data (cameras) and/or non-visible data (RADAR, SONAR, LiDAR), together with basic positional data from an Inertial Measurement Unit (IMU).

Together, these sensors collect data and build a picture of the surrounding environment. The SLAM algorithm then produces a best estimate of the device's position within that environment.

Another point worth noticing is that the features (such as walls, floors, furniture, and pillars) and the position of the device are defined relative to each other. The SLAM algorithm uses an iterative process to improve the estimated position as new positional information arrives. In general, more iterations give higher positional accuracy, but at the cost of more computation time and more capable hardware, such as GPUs with parallel processing capabilities.
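To make the iterative idea concrete, here is a minimal sketch in Python. It is not the method of any particular SLAM library, and it covers only the localization half of the problem: it assumes the landmark positions are already known and that the device measures nothing but its distance to each landmark. It then refines a rough position guess with Gauss-Newton steps. The function name `refine_position` and the sample landmarks are made up for illustration.

```python
import math

def refine_position(landmarks, ranges, guess, iterations=10):
    """Refine a 2D position guess from range measurements to known landmarks.

    Assumption: landmark positions are known and noise-free, which a real
    SLAM system cannot rely on (it estimates the landmarks as well).
    """
    x, y = guess
    history = [(x, y)]
    for _ in range(iterations):
        # Accumulate the 2x2 normal equations (J^T J) step = -J^T residual.
        a11 = a12 = a22 = b1 = b2 = 0.0
        for (lx, ly), measured in zip(landmarks, ranges):
            dx, dy = x - lx, y - ly
            predicted = math.hypot(dx, dy)
            if predicted < 1e-9:
                continue  # direction to this landmark is undefined
            jx, jy = dx / predicted, dy / predicted
            residual = predicted - measured
            a11 += jx * jx
            a12 += jx * jy
            a22 += jy * jy
            b1 -= jx * residual
            b2 -= jy * residual
        det = a11 * a22 - a12 * a12
        if abs(det) < 1e-12:
            break  # landmarks do not constrain the position
        x += (a22 * b1 - a12 * b2) / det
        y += (a11 * b2 - a12 * b1) / det
        history.append((x, y))
    return (x, y), history

# Hypothetical scene: three pillars and a device that is really at (3, 4).
landmarks = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
ranges = [math.hypot(3.0 - lx, 4.0 - ly) for lx, ly in landmarks]
estimate, history = refine_position(landmarks, ranges, guess=(1.0, 1.0))
for step, (px, py) in enumerate(history[:4]):
    print(step, round(px, 4), round(py, 4))
```

Printing the first few entries of `history` shows the estimate moving from the initial guess toward the true position, which is the trade-off described above: each extra iteration costs computation and buys accuracy.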

This may sound easy, but it requires heavy mathematical computation to fuse data from different sensors (camera, LiDAR, and IMU) and place it into a map with position information.

Let’s break SLAM down further and look at how it works.

How SLAM Works

The working of SLAM can be broken down into front-end data collection and back-end data processing.

Front-end data collection comes in two types: Visual SLAM and LiDAR SLAM.

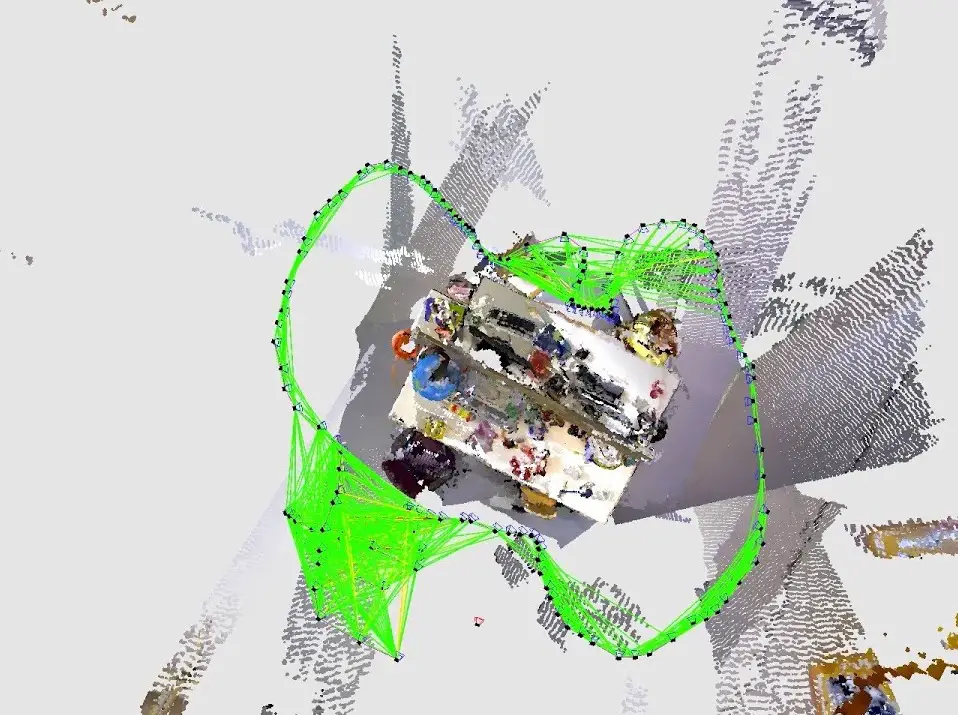

Visual SLAM (vSLAM) uses cameras to acquire imagery of the surroundings. Visual SLAM can use simple cameras (360-degree panoramic, wide-angle, and fish-eye cameras), compound-eye cameras (stereo and multi-camera rigs), and RGB-D cameras (depth and ToF cameras).

A ToF (time-of-flight) camera is a range imaging camera system that employs time-of-flight techniques to resolve distance between the camera and the subject for each point of the image, by measuring the round trip time of an artificial light signal provided by a laser or an LED.
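The time-of-flight principle reduces to one line of arithmetic: the light travels to the subject and back, so the distance is half the round-trip time multiplied by the speed of light. The sketch below assumes the signal travels at the vacuum speed of light and ignores sensor-specific effects such as phase wrapping; the function name is illustrative.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0

def tof_distance_m(round_trip_time_s):
    """Distance to the subject from the measured round-trip time of the light pulse."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # Halve the path because the pulse covers the distance twice.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0

print(tof_distance_m(20e-9))  # a 20 ns round trip is roughly 3 m
```

The numbers show why ToF sensors need very fine timing: one nanosecond of round-trip time corresponds to about 15 cm of distance.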

Visual SLAM implementations are generally low cost because they use relatively inexpensive cameras. Additionally, because cameras provide a large volume of information, they can be used to detect landmarks (previously measured positions). Landmark detection can also be combined with graph-based optimization, which adds flexibility to the SLAM implementation.

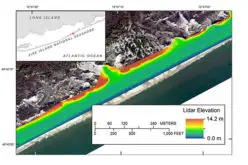

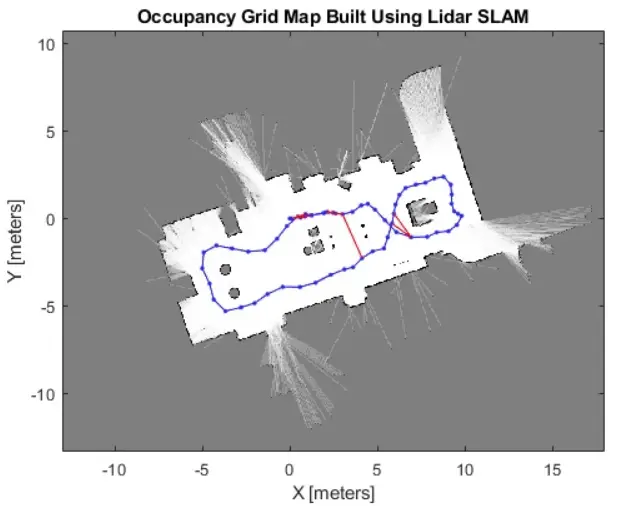

LiDAR SLAM implementations use a laser sensor. Compared to the cameras used in Visual SLAM, lasers are more precise and accurate. The high data capture rate and precision make LiDAR sensors suitable for high-speed applications on moving vehicles, such as self-driving cars and drones.

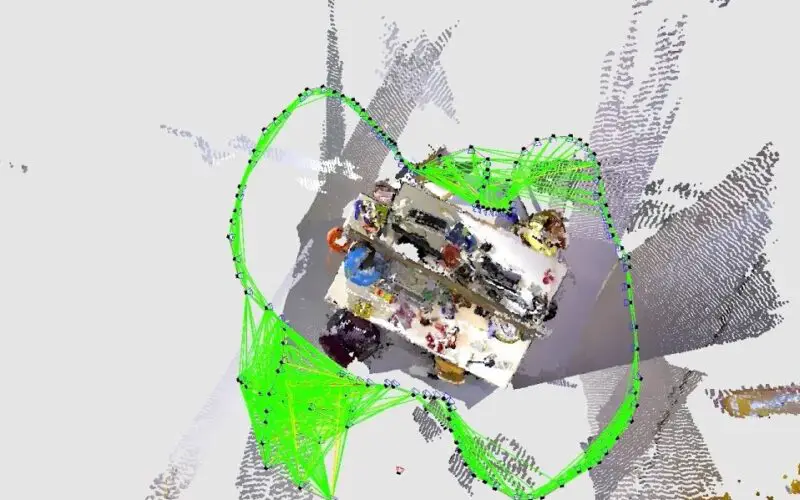

The output of LiDAR sensors, often called point cloud data, carries 2D (x, y) or 3D (x, y, z) positional information.

The laser sensor point cloud provides high-precision distance measurements, and works very effectively for map construction with SLAM. Generally, movement is estimated sequentially by matching the point clouds. The calculated movement (travelled distance) is used for localizing the vehicle. For LiDAR point cloud matching, iterative closest point (ICP) and normal distributions transform (NDT) algorithms are used. 2D or 3D point cloud maps can be represented as a grid map or voxel map.
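As a rough illustration of point cloud matching, the following is a bare-bones 2D ICP sketch in pure Python. It is a teaching version under simplifying assumptions (small motion between scans, no outliers, brute-force nearest-neighbour search) and is not how production libraries implement ICP. All function names are illustrative.

```python
import math

def apply_transform(points, theta, tx, ty):
    """Rotate points by theta (radians) and then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]

def nearest(point, cloud):
    """Brute-force nearest neighbour of point in cloud."""
    return min(cloud, key=lambda q: (q[0] - point[0]) ** 2 + (q[1] - point[1]) ** 2)

def best_rigid_transform(src, dst):
    """Least-squares rotation and translation mapping paired points src onto dst."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy
        bx, by = qx - dx, qy - dy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)

def icp_2d(source, target, iterations=20):
    """Estimate the motion that aligns the source scan with the target scan."""
    current = list(source)
    total_theta, total_tx, total_ty = 0.0, 0.0, 0.0
    for _ in range(iterations):
        # Step 1: pair every source point with its closest target point.
        matches = [nearest(p, target) for p in current]
        # Step 2: solve for the rigid motion that best aligns the pairs.
        theta, tx, ty = best_rigid_transform(current, matches)
        current = apply_transform(current, theta, tx, ty)
        # Step 3: compose this increment with the motion found so far.
        c, s = math.cos(theta), math.sin(theta)
        total_tx, total_ty = (c * total_tx - s * total_ty + tx,
                              s * total_tx + c * total_ty + ty)
        total_theta += theta
    return total_theta, total_tx, total_ty, current

# Hypothetical L-shaped wall seen in two consecutive scans.
previous_scan = [(float(x), 0.0) for x in range(6)] + [(0.0, float(y)) for y in range(1, 5)]
new_scan = apply_transform(previous_scan, -0.03, 0.15, -0.1)
theta, tx, ty, aligned = icp_2d(new_scan, previous_scan)
print(theta, tx, ty)
```

The recovered rotation and translation are the sequential movement estimate mentioned above. Real scans are noisy and only partially overlap, so practical implementations add outlier rejection and spatial indexes such as k-d trees.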

These days, many industries integrate 360-degree panoramic cameras with LiDAR sensors. This lets them deliver an as-is visualization of the survey or inspection site. It typically involves simultaneous capture of LiDAR point cloud data and 360-degree panoramic images. Later, in back-end processing, the LiDAR data can be colorized using the information in the panoramic images, rendering an as-is view of the site.
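The colorization step can be sketched as a projection: for each LiDAR point, work out which panorama pixel looks in that direction and copy its colour. The example below assumes an equirectangular panorama, a camera located exactly at the LiDAR origin, and an axis convention of x forward, y left, z up. Real rigs need a calibrated offset between the two sensors, which is omitted here, and the function names are illustrative.

```python
import math

def project_to_panorama(point, width, height):
    """Map a 3D point to the (col, row) of an equirectangular image.

    Assumed convention: x forward, y left, z up, camera at the origin,
    image centre looking along +x.
    """
    x, y, z = point
    distance = math.sqrt(x * x + y * y + z * z)
    if distance == 0.0:
        raise ValueError("point coincides with the camera centre")
    azimuth = math.atan2(y, x)  # angle around the vertical axis
    elevation = math.asin(max(-1.0, min(1.0, z / distance)))  # angle above the horizon
    u = (0.5 - azimuth / (2.0 * math.pi)) * width
    v = (0.5 - elevation / math.pi) * height
    col = int(u) % width            # wrap around the 360-degree seam
    row = min(int(v), height - 1)   # clamp the straight-down direction
    return col, row

def colorize(points, image):
    """Attach the colour of the matching panorama pixel to every point."""
    height, width = len(image), len(image[0])
    colored = []
    for point in points:
        col, row = project_to_panorama(point, width, height)
        colored.append(tuple(point) + tuple(image[row][col]))
    return colored

# Tiny stand-in panorama: pixel colour encodes its own (row, col).
image = [[(row, col, 0) for col in range(8)] for row in range(4)]
print(colorize([(1.0, 0.1, 0.1), (-1.0, 0.1, -0.5)], image))
```

Each output tuple is `(x, y, z, r, g, b)`, which is the usual shape of a colorized point cloud record.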

Back-end data processing

According to the MATLAB website, Visual SLAM algorithms can be broadly classified into two categories. Sparse methods match feature points of images and use algorithms such as PTAM and ORB-SLAM. Dense methods use the overall brightness of images and use algorithms such as DTAM, LSD-SLAM, DSO, and SVO.
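To give a feel for what "matching feature points" means in a sparse method, here is a toy matcher in Python. ORB-style descriptors are binary strings that are compared by Hamming distance. The 8-bit descriptors and the `max_distance` threshold below are invented for illustration (real ORB descriptors are 256 bits), and the code is not taken from any SLAM library.

```python
def hamming(a, b):
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")

def match_features(desc_a, desc_b, max_distance=2):
    """For each descriptor in frame A, find the closest descriptor in frame B.

    Returns (index_in_a, index_in_b, distance) for matches that are close enough.
    """
    if not desc_b:
        return []
    matches = []
    for i, da in enumerate(desc_a):
        j, dist = min(
            ((j, hamming(da, db)) for j, db in enumerate(desc_b)),
            key=lambda pair: pair[1],
        )
        if dist <= max_distance:
            matches.append((i, j, dist))
    return matches

# Hypothetical descriptors from two consecutive camera frames.
frame_a = [0b10110010, 0b01001101]
frame_b = [0b01001111, 0b10110011, 0b11111111]
print(match_features(frame_a, frame_b))  # [(0, 1, 1), (1, 0, 1)]
```

A sparse pipeline would feed such matches into a pose estimate, whereas a dense method skips the descriptor step and compares pixel brightness directly.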

LiDAR point cloud matching generally requires high processing power, so it is necessary to optimize the processes to improve speed. Due to these challenges, localization for autonomous vehicles may involve fusing other measurement results such as wheel odometry, global navigation satellite system (GNSS), and IMU data. For applications such as warehouse robots, 2D LiDAR SLAM is commonly used, whereas SLAM using 3D LiDAR point clouds can be used for UAVs and automated parking.
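The simplest way to picture this kind of fusion is inverse-variance weighting: each source reports a value together with how uncertain it is, and the more certain sources get more weight. The sketch below handles a single 1D quantity at a single instant. Real systems typically use Kalman filters or graph optimization over time, and the sample numbers are invented for illustration.

```python
def fuse_estimates(estimates):
    """Combine independent (value, variance) estimates of the same quantity.

    Returns the fused value and its variance, which is never larger than
    the smallest input variance.
    """
    weight_sum = 0.0
    weighted_total = 0.0
    for value, variance in estimates:
        if variance <= 0:
            raise ValueError("variance must be positive")
        weight = 1.0 / variance  # more certain sources weigh more
        weight_sum += weight
        weighted_total += weight * value
    return weighted_total / weight_sum, 1.0 / weight_sum

# Hypothetical along-track position (metres) from three sources.
sources = [
    (12.4, 0.05),  # LiDAR scan matching: precise
    (12.9, 0.50),  # wheel odometry: drifts
    (11.0, 4.00),  # GNSS: coarse, weak signal
]
print(fuse_estimates(sources))
```

The fused value stays close to the LiDAR estimate, but the other sensors still contribute, which is why they help when scan matching is slow or temporarily unreliable.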

A survey paper by Takafumi Taketomi et al., titled “Visual SLAM algorithms: a survey from 2010 to 2016”, is a good source of information on the various Visual SLAM algorithms.

In 2016, Google also released Cartographer, an open-source library for real-time simultaneous localization and mapping (SLAM) in 2D and 3D with ROS support.

Why SLAM Matters

As mentioned at the start of the article, SLAM originated when engineers were looking for ways to position robots indoors.

Hopefully, the reason is clear by now. If not, don’t be disheartened.

SLAM is very useful in locations where GNSS data for positioning is unavailable or very limited. Because SLAM can map and position within an environment without an additional source of position information, it is well suited to indoor mapping.

Source –

- MATLAB

- NVIDIA

- GeoSLAM

- http://ais.informatik.uni-freiburg.de/teaching/ss12/robotics/slides/12-slam.pdf
